Deepfakes and American Law

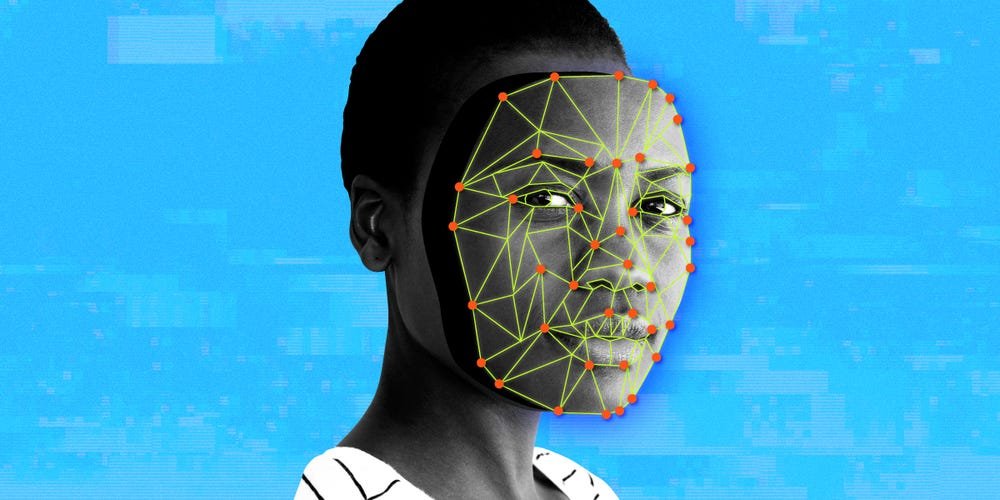

Samantha Lee/Business Insider

Artificial intelligence seems capable of almost anything nowadays. Economists use A.I. to forecast the state of the economy, and computers can outsmart chess grandmasters in a matter of seconds. And now, anyone can make a video of someone doing or saying anything.

What Are Deepfakes?

Deepfakes are AI-generated media that use “deep learning” to edit another person into audio or video, or to create new audio or video entirely. Think of the popular website “ThisPersonDoesNotExist,” or the infamous videos of politicians like Nancy Pelosi with their speech slowed down to make them appear drunk or inept. The latter example is categorized as a “cheapfake” because of the poor quality and obvious falsity of the photo or video. Cheapfakes also take significantly less time and energy to produce. Deepfakes, as we know them today, originated in 2017 with Reddit user u/deepfakes. Using open-source face-swapping technology, similar to the popular Snapchat filter, they edited celebrities like Gal Gadot and Scarlett Johansson into pornographic films. Since then, deepfakes have become a common part of modern culture, to that culture’s detriment.

Deepfakes can largely be divided into two categories: pornographic and informational. Pornographic deepfakes are largely self-explanatory. Someone, usually female and famous, has their face (and potentially their voice) edited into a pornographic video. This makes it appear as though they are partaking in the sexual acts on screen, and it is thus a form of nonconsensual pornography.

Alexandria Ocasio-Cortez, for example, is a frequent victim of deepfake pornography, with titles such as “Porn: AOC do anything for congressional votes deepfake.” Such content is clearly intended to humiliate her and degrade her position as a woman in Congress, which is likely a core part of its appeal to viewers. While deepfakes of men do exist and are extremely harmful, it is clear that deepfake pornography is used as a targeted attack on women far more often. Such attacks are not limited to celebrities and politicians, however. Even “civilian” women are subjects of deepfake pornography, usually as victims of “revenge porn.” Posting images of ourselves has become commonplace on the internet, so how could anyone possibly anticipate the ways in which these pictures could be used against us? Anyone could falsely put someone’s image into sexual media, without their consent or knowledge, for all to see. Pornographic deepfakes account for about 90-95% of deepfake content on the internet.

Informational deepfakes, on the other hand, are the type of posts that are more likely to come up on a social media timeline. They range from videos of politicians singing funny songs, to Elon Musk’s face on Miley Cyrus’s body, to Nixon announcing the failure of Apollo 11. Despite the often comedic nature of these videos and their comparatively small number, they can still affect us all in extreme ways.

While the “cheapfake” of Nancy Pelosi slurring her words together was debunked quickly by major news outlets, it was not without impact. The video itself gained over 2 million views and sparked outrage among conservatives who believed it to be true. In fact, in 2020, Senator John Kennedy stated that Nancy Pelosi “needs to go to bed. She’s drunk,” after she refused to negotiate on the coronavirus spending bill. Even cheapfakes can have huge implications, but what about the more realistic material?

In 2020, a group used manipulated audio to defraud a Hong Kong bank of $35 million. In another case, EU parliamentarians reportedly had a video call with a deepfake version of Leonid Volkov. And across the internet, hundreds of pornographic deepfakes, even of non-celebrities and non-politicians, have spread like wildfire. The AI used to create deepfakes becomes more advanced every day. We have gone from needing hours of footage and effort to produce a mediocre manipulation of a 30-second video to having access to programs that can make any person appear nude using only one photo of them clothed. How can we know what is real and what is fake anymore? And can we do anything about it?

Deepfakes in a Post-Truth World

The divide between truth and falsehood has become hotly debated in recent years, giving way to the idea that we live in a post-truth world. This does not mean that truth no longer exists, but that truth matters less than personal belief. Think of it as a kind of anti-empiricist renaissance. Post-truth has not been ushered in by deepfakes alone. Conspiracy theories, like the idea of “fake news,” along with distrust of any kind of authority or media outside of one’s group, and even targeted ads to an extent, are larger contributing factors.

But deepfakes could not have achieved the prominence or impact that they have without existing in a post-truth world. In turn, deepfakes have accelerated the pace of the post-truth movement to the extent that we cannot even agree on what is true (or whether truth matters), let alone cooperate with one another. One important example of the post-truth apocalypse is the January 6th insurrection. Believing that Biden’s electoral victory was a lie, despite evidence proving that he won fairly, hundreds stormed the U.S. Capitol building, resulting in seven deaths. Post-truth is not just a matter of arguing with relatives over which news sources are reliable. It is a genuine threat to democracy and society as a whole.

Current Legislation’s Drawbacks and Benefits

Despite the immense threat of deepfakes, there are many limitations to legislating them, especially informational deepfakes. Such limitations include Section 230 of the Communications Decency Act, the First Amendment, copyright law (including the fair use doctrine), and nonconsensual pornography laws.

Section 230 is the reason why we can communicate freely on the internet, whether through silly memes about storming Area 51 or actual plans to storm other important government buildings. It states that “No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.” In practice, a platform like Twitter cannot be sued over what its users say on it. Individual users can potentially be punished for libel, slander, and more, but the platform itself is not at risk. This also means that the government cannot force websites to ban specific forms of speech or expression, leaving the regulation of speech on a platform up to its owners. This is great for free speech and enables all kinds of wonderful expression on the internet.

However, this does present some issues when it comes to preventing the spread of nonconsensual pornography, deepfakes, and general misinformation. Even though the original poster can be punished for what they post (though doing so is quite difficult and exceedingly rare), it is impossible to prevent others from reposting the same thing, enabling it to spread like wildfire. Despite the law’s pitfalls, amending Section 230 might not only restrict free expression on the internet but would also fail to be a complete solution to this problem. After all, posting deepfakes or misinformation is not illegal.

Much like Section 230, the First Amendment is an essential part of American democracy that enables and protects free speech and expression. But it also protects those who post pornographic or informational deepfakes. In some interpretations, the creation of deepfakes is an act of expression. In Hustler Magazine, Inc. v. Falwell, the Supreme Court rejected an emotional distress claim over a parody advertisement depicting a minister as having committed incest, reasoning that “additional proof of falsity with actual malice was necessary ‘to give adequate’ breathing space to the freedoms protected by the First Amendment when the speech involves public figures or matters of public concern.” Once again, this precedent is beneficial in that it protects free speech, but it makes it difficult to legislate deepfakes, particularly informational deepfakes.

Another common defense of informational deepfakes is that they are not defamatory but are instead parody or satire, and are thus protected under the First Amendment. In many instances, this is true; what else could a “Donald Trump And Barack Obama Singing Barbie Girl By Aqua” video be labeled? In a world full of memes in every form and about everything, how can we tell the difference?

Copyright laws can be useful in traditional cases of revenge porn, as they can allow the victim to “claim” the image and potentially prevent its dissemination. However, they complicate matters with regard to deepfakes. This is because a deepfake is technically a transformative work, which weighs in favor of fair use under Section 107 of the Copyright Act. A transformative work means “that the new work has significantly changed the appearance or nature of the copyrighted work” or the original work. Even if some of the images used within the deepfake are already copyrighted, the creator is still likely to win with a fair use claim due to the inherently transformative nature of the work. In pornographic deepfakes, the transformed material also includes the original video onto which the person’s face was edited, which likely eliminates the possibility of the adult star being able to sue as well.

Laws concerning nonconsensual pornography are generally ineffective in protecting victims of nonconsensual pornography, let alone victims of deepfake pornography. But these laws do have the potential to protect victims. As mentioned previously, deepfake pornography is categorically a form of nonconsensual pornography (or NCP), as the people involved literally did not consent to the creation of this pornography, but it is not legally treated as one. This means that the copyright protections that have been afforded to victims of NCP are not available to deepfake pornography victims, even before taking into account the copyright restrictions applied to deepfakes. Moreover, this excludes deepfake pornography victims from protection under state laws prohibiting the dissemination of nonconsensual pornography, which have been upheld in court as constitutional under the First Amendment. By categorizing deepfake pornography as something separate from nonconsensual pornography, the law shuts victims out from any form of meaningful legal protection.

The seemingly insurmountable challenges surrounding deepfake legislation may evoke a sense of disappointment, fear, or maybe hopelessness. But there are solutions. It is worth noting that most of these solutions pertain to deepfake pornography. It would be impossible to prevent people from posting informational deepfakes, or to punish them for doing so, without infringing on constitutional rights. We cannot and should not harm the right to freedom of speech and expression, and we must ensure that the rights of victims and the right of free speech are equally protected. That being said, no one should have to wake up to find that their face is nonconsensually on the front page of a porn website and be able to do nothing about it.

The only states with legislation concerning deepfakes are Virginia, Texas, and California. Virginia’s and most of California’s legislation refers directly to pornographic deepfakes, and Texas’s and some of California’s legislation refers to a specific subset of informational deepfakes.

Virginia Code Annotated § 18.2-386.2 specifically outlaws the dissemination of pornographic deepfakes, but not their creation. Virginia’s law also only protects against deepfake pornography created with “the intent to depict an actual person and who is recognizable as an actual person by the person’s face, likeness.” California’s AB-602 outlaws the creation of deepfake pornography and also incorporates the law into current NCP law, thus preventing dissemination.

It is clear that, at the federal level, a combination of Virginia’s law and California’s AB-602 could provide the necessary protection for victims of deepfake pornography by outlawing both its creation and its dissemination. Legally categorizing deepfake pornography as NCP would afford victims even more protection. A truly comprehensive and worthwhile piece of legislation would encompass all of the aforementioned aspects and also allow adult stars whose videos are used in deepfake pornography to take legal action.

Deepfakes pose a genuine threat to people’s lives and safety, and to society as a whole. This issue cannot be allowed to persist and must be addressed through legislation at the federal level in order to protect the public. Congressional representatives need to recognize deepfakes as a threat and pass legislation that can protect both victims and free speech.